Introduction

archive.pl lets you collect URLs in a text file and stores them in the Internet Archive. It fetches the documents itself and scrapes some metadata in order to generate a link list, either as HTML suitable for posting to a blog or as an Atom feed. Windows users who lack Perl on their machine can obtain it as an exe file.

Requirements

There is an image on Docker Hub now. Using it is strongly recommended over a self-install or the Windows binaries.

Perl 5.24 (earlier versions are not tested, but it is likely to work with every build that is capable of getting the required modules installed). If there are issues with installing the XMLRPC::Lite module, install it with CPAN’s notest pragma (`notest install XMLRPC::Lite` in the CPAN shell).

Usage

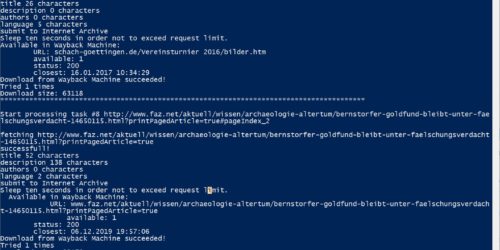

Collect the URLs you want to archive in the file urls.txt, separated by line breaks and UTF-8-encoded, and call perl archive.pl without arguments. The script does two things: it fetches the URLs and extracts some metadata (works with HTML and PDF), and it submits them to the Internet Archive. Then it generates an HTML file with a link list that you may post to your blog, either manually or by automated submission to WordPress. Alternatively you can get the link list as an Atom feed. Posting the links on Twitter is no longer possible as of v2.3. Regardless of the format, you can upload the file to a server via FTP. If the archived URL points to an image, a thumbnail is shown in the output file.
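As a minimal sketch of this workflow, the following shell lines create an input file and start the script. The two URLs are placeholders, and the check for `archive.pl` in the current directory is only there so that the snippet does nothing when it is run elsewhere.

```shell
# Build a small input file: one URL per line, UTF-8, no BOM.
# The URLs are placeholders, not taken from the project.
printf '%s\n' \
  'https://example.org/' \
  'https://example.org/report.pdf' > urls.txt

# Sanity check before a run: count the non-empty lines.
url_count=$(grep -c . urls.txt)
echo "urls.txt contains $url_count URLs"

# Start the script without arguments if it is present in this directory.
if [ -f archive.pl ]; then
  perl archive.pl
fi
```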

The Internet Archive has a submission limit of 15 URLs per minute per IP. Set an appropriate delay (at least five seconds) to meet it. If you want to be faster, set a proxy server which rotates IPs. This is possible, for instance, with TOR running as a service (the TOR browser on Windows does not work here!). Set MaxCircuitDirtiness 10 in the configuration file (/path/to/torrc) to rotate IPs every ten seconds.
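The arithmetic behind the recommended delay can be checked in the shell. This is only a sketch: the list size of 120 URLs is an assumed example, and the estimate counts the delays alone, not the download time.

```shell
# Minimum delay implied by the limit of 15 URLs per minute per IP.
limit_per_minute=15
min_delay=$((60 / limit_per_minute))   # 60 / 15 = 4 seconds

# Delay recommended in the text (at least five seconds leaves some headroom).
delay=5

# Lower bound on the run time for a hypothetical list of 120 URLs.
urls=120
total_seconds=$((urls * delay))

echo "minimum delay: ${min_delay}s, chosen delay: ${delay}s"
echo "${urls} URLs take at least $((total_seconds / 60)) minutes"
```

With a TOR service running locally, the proxy would be passed as `perl archive.pl -P socks4://localhost:9050`, which is the address given in the option list below.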

Optional parameters available:

- `-a` output as Atom feed instead of HTML

- `-c <creator>` name of feed creator (feed only)

- `-d <path>` FTP path

- `-D` Debug mode - don't save to Internet Archive

- `-f <filename>` name of input file if other than `urls.txt`

- `-F` Download root URLs through Firefox (recommended)

- `-h` Show commands

- `-i <title>` Feed or HTML title

- `-k <consumer key>` *deprecated*

- `-n <username>` FTP or WordPress user

- `-o <host>` FTP host

- `-p <password>` FTP or WordPress password

- `-P <proxy>` A proxy, e.g. socks4://localhost:9050 for TOR service

- `-r` Obey robots.txt

- `-s` Save feed in Wayback machine (feed only)

- `-t <access token>` *deprecated*

- `-T <seconds>` delay per URL in seconds to respect IA's request limit

- `-u <URL>` Feed or WordPress (xmlrpc.php) URL

- `-v` version info

- `-w` *deprecated*

- `-x <secret consumer key>` *deprecated*

- `-y <secret access token>` *deprecated*

- `-z <time zone>` Time zone (WordPress only)
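A few typical combinations of these options might look as follows. This is a sketch under assumptions: the `archive` function is a hypothetical helper (not part of the project) that only prints the command when `archive.pl` is not in the current directory, all titles, hosts, paths and credentials are placeholders, and how the options interact is inferred from the list above rather than confirmed.

```shell
# Hypothetical helper: runs archive.pl if it is in the current directory,
# otherwise only prints the command line that would be used.
archive() {
  if [ -f archive.pl ]; then
    perl archive.pl "$@"
  else
    echo "perl archive.pl $*"
  fi
}

# Debug run on a custom input file; nothing is saved to the Internet Archive.
archive -D -f links.txt

# Atom feed instead of HTML, with a five-second delay per URL.
# Title and creator are placeholder values.
archive -a -i "Weekly links" -c "Jane Doe" -T 5

# HTML output uploaded via FTP; host, path and credentials are placeholders.
archive -o ftp.example.org -d /links -n user -p secret -T 5
```

Starting with `-D` is a cheap way to check the input file and the generated output before anything is submitted.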

Changelog

v2.3

- Removed the ability to post URLs on Twitter due to Twitter API changes.

- Removed the ability to store Twitter images in various sizes because Twitter abandoned the corresponding markup long ago.

- Blacklist extended.

v2.2

- Blacklist of URLs (mostly social media sharing URLs which only point to a login mask).

- Docker image on Docker Hub.

- Fixed image data URLs bug.

- Removed TLS from Wayback URLs (too many protocol errors).

- Added -F option which downloads root URLs through Firefox to circumvent DDoS protection etc.

- Added mirror.pl.

v2.1

- Introduced option -P to connect to a proxy server that can rotate IPs (e.g. TOR).

- User agent bug in LWP::UserAgent constructor call fixed.

- -T can operate with floats.

- Screen logging enhanced (total execution time and total number of links).

- IA JSON parsing more reliable.

v2.0

- Script can save all linked URLs, too (IA has restricted this service to logged-in users running JavaScript).

- Debug mode (does not save to IA).

- WordPress bug fixed (8-bit ASCII in text led to a database error).

- Ampersand bug in ‘URL available’ request fixed.

- Trim metadata.

- Disregard robots.txt by default.

v1.8

- Post HTML outfile to WordPress.

- Wayback machine saves all documents linked in the URL if it is HTML (Windows only).

- Time delay between processing of URLs because Internet Archive set up a request limit.

- Version and help switches.

v1.7

- Tweet URLs.

- Enhanced handling of PDF metadata.

- Always save biggest Twitter image.

v1.6

Not published.

v1.5

- Supports wget and PowerShell (w flag).

- Displays the closest Wayback copy date.

- Better URL parsing.

- Windows executable is 64 bit only, since not all modules install properly on 32 bit.

v1.4

- Enhanced metadata scraping.

- Archive images from Twitter in different sizes.

- Added project page link to outfile.

- Remove UTF-8 BOM from infile.

- User agent avoids the strings `archiv` and `wayback`.

- Internet Archive via TLS URL.

- Thumbnail if URL points to an image.

v1.3

- Debugging messages removed.

- Archive.Org URL changed.

v1.2

- Internationalized domain names (IDN) allowed in URLs.

- Blank spaces allowed in URLs.

- URL list must be in UTF-8 now!

- Only line breaks allowed as list separator in URL list.

v1.1

- Added workaround for Windows ampersand bug in Browser::Open (ticket on CPAN).

License

Copyright © 2015–2022 Ingram Braun

GPL 3 or higher.

Download

- sources

- exe for Windows 64 bit (XP or higher)

- exe for Windows 32 bit (XP or higher) discontinued!

It is best to use Docker:

or clone it from GitHub:
